Invisible choices

Why don't we choose our own algorithms?

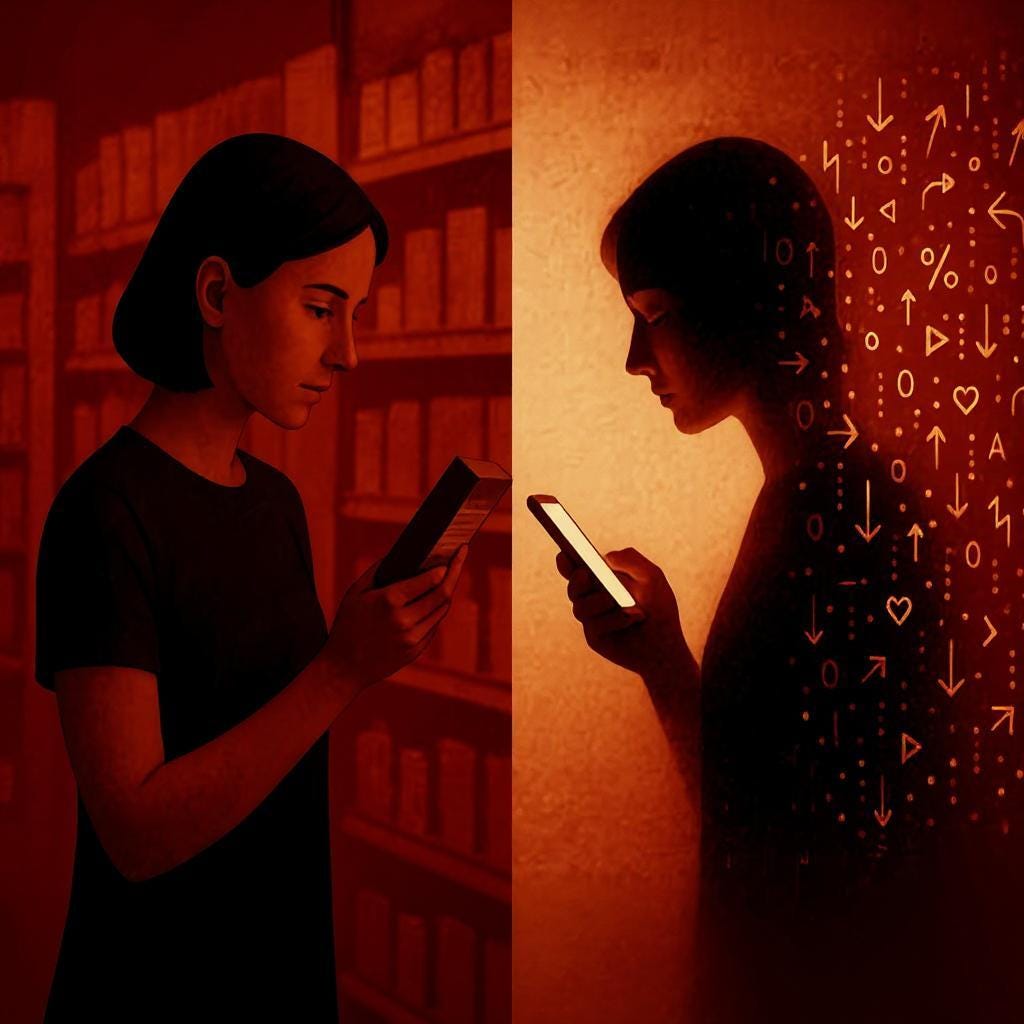

The modern consumer stands in the grocery aisle, carefully examining nutrition labels and scanning for artificial ingredients. Later, that same person will spend three hours on TikTok, completely unaware of the elaborate persuasion architecture determining every video she watches.

What if we applied the same scrutiny to our mental consumption that we bring to our physical consumption, and demanded transparency about what shapes our minds with the same energy and devotion we reserve for what enters our bodies?

By asking why we don't choose our own algorithms, we expose the hidden influence technology has on our autonomy and explore the concept of choice in the digital age.

I. Understanding the Algorithmic Status Quo: The Algorithm as a Silent Influencer

“Priming” is a phenomenon in cognitive psychology where exposure to certain stimuli influences our subsequent perceptions and behaviours, often without conscious awareness. A classic example comes from Bargh et al. (1996), where participants exposed to aging-related words (like "grey" or "wrinkle") unconsciously walked more slowly afterward. Although this particular finding has since proved difficult to replicate, it illustrates how susceptible we may be to external influences.

Priming works through two principles: (1) when making judgements, we draw on only a subset of the available information, and (2) we rely most on whatever information is most accessible at that moment.

Current social media apps prime us constantly. Their design maximizes engagement and screen time. Tech companies justify this in terms of relevance: they claim that knowing us better allows them to show content that is more personalized, and therefore more valuable to us. They position themselves as sophisticated matchmakers who understand our needs better than we do ourselves.

I argue that these algorithms don't merely reflect our existing preferences; they actively create and amplify them. Without our consent. This crosses a fundamental boundary of personal autonomy, a kind of “cognitive trespassing”.

II. The Illusion of Choice in the Digital Sphere

Social media algorithms leverage priming effects at an unprecedented scale and with unprecedented subtlety. They operate continuously, measuring and adapting to our every reaction in real time.

The process creates an illusion of autonomy. We might believe we freely choose what to click, like, and follow, when in fact we're responding to algorithmic choices we neither see nor fully understand.

Over time, we might develop strong beliefs that feel like our own independent thoughts but are in fact shaped by repeated exposure to similar, curated content. Our beliefs, preferences, and worldviews become products of algorithmic curation rather than genuine self-determination.

The asymmetry between our physical and digital consumption habits reveals a blind spot in our conception of freedom. While we carefully scrutinize what enters our bodies, we allow opaque algorithms to shape our minds without similar caution.

The question becomes not just who controls these algorithms, but why we've accepted their influence without resistance.

III. Alternative Imaginations: What Could Algorithmic Choice Look Like?

The current algorithmic landscape isn't inevitable. It is a deliberate choice that mostly serves platform owners. Below, I'll explore some conceivable alternatives that would return agency to users.

1. The Algorithm Marketplace

Imagine opening your favourite social media app and being presented with the following options:

Standard Engagement Algorithm: "The platform's default recommendation system that optimizes for maximum user engagement and time spent. It prioritizes content that will likely keep you scrolling, watching, and interacting. Most effective at predicting what will capture your attention."

Growth Algorithm: “This recommendation system will expose you to viewpoints that challenge your existing beliefs. Warning: May cause discomfort but expands your worldview.”

Wellbeing Algorithm: “Optimized for mental health rather than engagement. Limits doomscrolling, surfaces uplifting content, and creates natural stopping and exit points.”

Serendipity Engine: “Designed to break you out of predictable patterns and introduce intellectual wildcards. For those who value discovery over comfort.”

The algorithm marketplace illustrates something that goes beyond personalization. By requiring explicit consent to specific forms of algorithmic influence, we make visible the invisible forces shaping our perceptions and decisions.

2. Algorithms Through the Lens of Neuroplasticity

Research on neuroplasticity shows that our brains physically rewire in response to repeated stimuli. Critics argue that the current algorithmic regime engages dopamine-driven reward pathways associated with addiction while neglecting neural networks tied to deep thinking, empathy, and creativity. (Source: Alter, A. (2017). Irresistible: The Rise of Addictive Technology and the Business of Keeping Us Hooked. Penguin Press.)

Alternative algorithms could be designed with neurological flourishing as their primary goal:

Contemplation Mode: Creates deliberate spaces between content to allow for reflection rather than constant consumption.

Creativity Catalyst: Monitors engagement patterns and intervenes when users fall into passive consumption, prompting active creation instead.

Connection Optimizer: Prioritizes meaningful human exchanges over viral content, strengthening neural pathways associated with social bonding.

Seen through the lens of neuroplasticity, the question isn't just what content we see, but how repeated exposure to it reshapes our neural architecture.

3. Cross-Cultural Algorithm Rights

Western individualism dominates tech design, but different cultural traditions offer alternative approaches to algorithmic choice:

Communal Consent Models: Drawing from indigenous decision-making traditions, algorithms could require group consent before optimizing for individual engagement.

Intergenerational Impact Assessment: Inspired by the "seventh generation" philosophy of the Iroquois (a confederacy of indigenous nations in North America), algorithms could evaluate how recommendation patterns might affect society for generations to come.

Harmony-Centered Design: Borrowing from East Asian philosophical traditions, algorithms could prioritize social balance over individual preference.

This cross-cultural exploration reveals that what appears to be a neutral question of algorithmic choice actually contains embedded value systems. Current algorithms don't just reflect technical decisions; they embody specific Western ideals of individualism, efficiency, and consumer choice. Considering alternative cultural frameworks shows that the approach embedded in Western-dominated technology isn't the only possible one. The algorithms shaping our digital lives could instead be designed to prioritize community wellbeing, intergenerational responsibility, or social harmony: values that could lead to fundamentally different online experiences and outcomes.

4. Open-Source Algorithmic Governance

The most radical alternative would be for users to participate directly in creating algorithms:

Algorithm Cooperatives: User-owned collectives that develop and maintain recommendation systems aligned with member values rather than profit motives.

Algorithmic Juries: Randomly selected panels of users who review and approve major changes to recommendation systems before deployment.

Open-source alternatives could transform users from passive consumers into active governors of their information environments.

IV. The Choice Paradox

Which algorithm would you choose? And how often would you change it? Would you choose one that challenges you or one that comforts you?

Critics will argue that most users don’t want this choice. They’d rather have decisions made for them. This reveals the central tension: freedom requires both autonomy and responsibility.

By envisioning alternatives, we begin to understand how deeply our current digital reality is constructed rather than inevitable. Confronting algorithmic opacity matters precisely because it helps us reclaim autonomy and critically examine how technology shapes society beyond our devices.

Conclusion

The question of choosing our own algorithms is ultimately about recognizing and making visible the invisible frameworks that shape our lives. While Big Tech has little incentive to introduce alternative algorithmic choices, we can begin by demanding transparency in how these systems operate.

Perhaps the first step toward genuine digital autonomy isn't selecting algorithms, but insisting on visibility into how they're already selecting us. Only by understanding the hidden architecture of influence can we meaningfully assert our right to choose what shapes our minds, with the same vigilance we apply to what enters our bodies.