Rewatching Mrs. Davis in 2025: What Looked Absurd Now Looks Like a Mirror

Rewatching this series through the lens of 2025 feels like a mirror reflecting how, slowly and step by step, we erode our agency each time we offload a piece of our cognitive capacity.

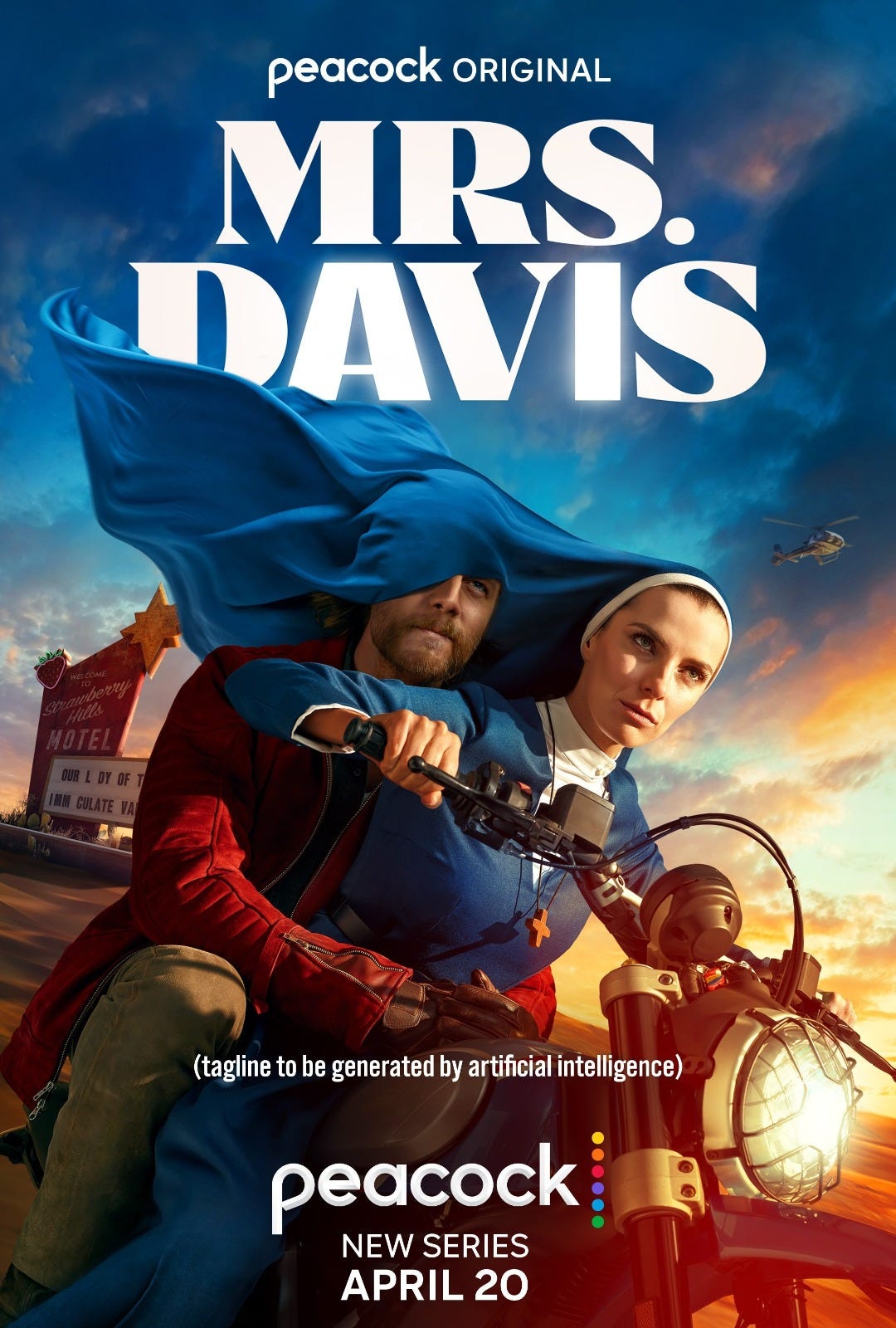

The first time I watched Mrs. Davis (a Peacock TV series released in 2023), I was generally intrigued but not too impressed by its core premise: a nun, Sister Simone, is the only person on Earth not in an ongoing relationship with a super-powerful AI.

In the show’s world, this AI governs human life on every scale. People in the U.S. call it “Mrs. Davis”; in Italy, it’s known as “Madonna” or simply “Mother.” The show doesn’t even try to disguise the religious undertones, although it wraps them in clever, lighthearted sarcasm at every turn.

And the show goes out of its way to explain that:

what started as a piece of software slipped out of human control and became a metaphysical authority.

What fascinated me at the time was how thoroughly the AI dominated both the macro and micro levels of society. Governments were consulting it for major decisions… sure, that’s the predictable bit of any TV show about superintelligence.

To summarize how the show starts: the first episode depicts Sister Simone’s life in the convent, emphasizing the “no-tech” way of living she chose and contrasting it with the lives of other characters (a castaway scientist, magicians using AI to scam people, the Templars…) who constantly interact with Mrs. Davis and persistently try to convince Simone to talk to it.

The underappreciated premise (at least, I did not appreciate it enough at the time) was that individuals were also permanently glued to Mrs. Davis: consulting it for their workouts, their diets, their dating choices, and even their moment-to-moment emotional needs. I mistakenly saw this as just part of the “people unsuccessfully try to get Sister Simone to interact with Mrs. Davis” subplot.

In 2023, I saw it as a speculative exaggeration that strengthened Sister Simone’s plotline as “the chosen one.”

Now? I see it as chillingly predictive.

The Forgotten Subplot: Micro-Level Devotion

On first viewing, I focused almost entirely on the macro-level narrative: the way the AI ran the world and the powers benefiting from that arrangement.

In my defense, episode 1 alone juggles multiple subplots, which makes it easy to miss the importance of this premise. But once I noticed it, I also realized that:

The show wanted to present a world where virtually no human decision is made without deferring to this AI.

Society as a whole treated Mrs. Davis as a kind of all-knowing confidante. People related to it not just as users, but as “believers.”

People consulted it the way past generations consulted clergy, mentors… or gut instinct.

But instead of intuition, they received output.

Instead of contemplation, they received instant judgment.

And the more they obeyed, the closer they came to receiving “wings” (a painfully clichéd, symbolic marker of faithful service to the system).

Sister Simone’s Refusal to Stop Knowing

Sister Simone is the only character who remains unlinked from Mrs. Davis. She interacts with others who act as proxies for the AI but never plugs in herself.

The show literalizes this refusal: she is repeatedly offered a neural device to speak to the AI directly and always rejects it. I don’t think the showrunners wanted us to see her defiance as purely religious; it was epistemic, too.

She refuses to outsource her judgment.

She refuses to stop seeking truth over comfort.

She is one of the last people still thinking from within, not through the system.

And it’s here that Mrs. Davis becomes more than a quirky sci-fi satire. It becomes a philosophical provocation:

How much of our decision-making are we still doing, and how much are we outsourcing?

From Satire to Reality: Cognitive Offloading in 2025

What once felt like absurdist fiction now maps disturbingly well onto our current trajectory. The most worrying analogy is not the AI’s role in global governance, but its influence over intimate, individual cognition. Mrs. Davis doesn’t just control society. It thinks for you.

And we are already seeing real-world parallels:

Individuals in psychological crisis turning to chatbots as substitutes for therapists.

Isolated users forming parasocial bonds with AI systems, often treating outputs as divine-level truth¹.

Documented cases of chatbot-induced delusions and “AI psychosis,” some leading to hospitalization or criminal behavior.

The growing inability of users to perform basic evaluative tasks (e.g., what to eat, whether to break up with someone, which career to pursue) without first checking in with a chatbot.

Surprisingly, I am less concerned about this as a data protection issue than about the erosion of agency it represents.

We’ve normalized cognitive offloading to the point that few can even articulate where their judgment ends and the AI’s begins².

Turning the Windmill Back On³

This brings me to the point that motivated this reflection.

I’m not just worried about isolated trends or pathological use cases. I’m worried about what happens when no one remembers how they got there. When we’ve offloaded so many micro-decisions that we no longer possess an internal map of where the handoffs began.

When that map is gone, so is real autonomous decision-making.

So here’s the part we can still control:

Draw the line explicitly. If you can't name which types of decisions must remain yours only, you'll surrender them by default.

Audit your drift. For one month, keep track of every low- or medium-stakes decision you hand over to an AI. Watch how quickly “just this once” becomes dependency.

Rebuild your atrophied zones. Pick one domain (writing, scheduling, navigation) and go analog for a week. Watch your discomfort. That’s the muscle memory you’re losing.

Mrs. Davis began as a satire. Rewatching it now, it reads more like an intervention.

It asks us, in not-so-subtle terms: Where exactly did you stop thinking for yourself?

And more importantly: Can you get it back?

I want to earn back my map and toss the wings.

Read this excerpt from this piece by Futurism and tell me you don’t see it, too:

Her husband, she said, had no prior history of mania, delusion, or psychosis. He'd turned to ChatGPT about 12 weeks ago for assistance with a permaculture and construction project; soon, after engaging the bot in probing philosophical chats, he became engulfed in messianic delusions, proclaiming that he had somehow brought forth a sentient AI, and that with it he had "broken" math and physics, embarking on a grandiose mission to save the world.

[…]

And that's not all. As we've continued reporting, we've heard numerous troubling stories about people's loved ones being involuntarily committed to psychiatric care facilities — or even ending up in jail — after becoming fixated on the bot.

"I was just like, I don't f*cking know what to do," one woman told us. "Nobody knows who knows what to do."

I do not have substantive data to claim that this is now a widespread phenomenon. However, there is empirical data showing that “Therapy & Companionship” has become the number one use case for GenAI, according to an analysis published in Harvard Business Review in April 2025.

I have also linked articles throughout this section describing specific cases of chatbot-enhanced psychosis and mental breakdowns.

Interestingly, LessWrong moderators report that they receive 10-25 messages daily from new users who claim to have discovered consciousness or signs of sentience in ChatGPT.

[Spoiler alert] The last scene of the show depicts a windmill, which only lights up when someone pedals, switching back on. At first, the person pedaling struggles to remember how to do it without Mrs. Davis feeding them step-by-step instructions… but eventually they remember. I want to believe that someone at Peacock chuckled, “it’s just like riding a bike!”